The CODE4Vision project is a five-year research project funded by a Consolidator Grant from the European Research Council (ERC). Consolidator Grants provide a wonderful and generous source of support for researchers during the early phase of their careers as independent group leaders in Europe. For the CODE4Vision project, funding started in 2017, and a major part of the research group worked on the project until its end in 2022.

In the project, we investigated the neural circuitry of the retina, in particular the connection between bipolar cells and ganglion cells, through a combination of experiments and computational analyses. We then used this knowledge to build models of ganglion cell responses under natural stimulation. Furthermore, we aimed to apply these models to develop strategies for artificial retinal stimulation (for example, via inserted optogenetic light sensors) that may recreate natural activity patterns. These strategies will support the development of therapies for patients with advanced retinal degeneration, aiming at restoring at least a partial sense of vision through retinal implants or optogenetic treatments.

The text below describes the motivation and scientific background of the project and collects results that have been obtained during the project.

Motivation

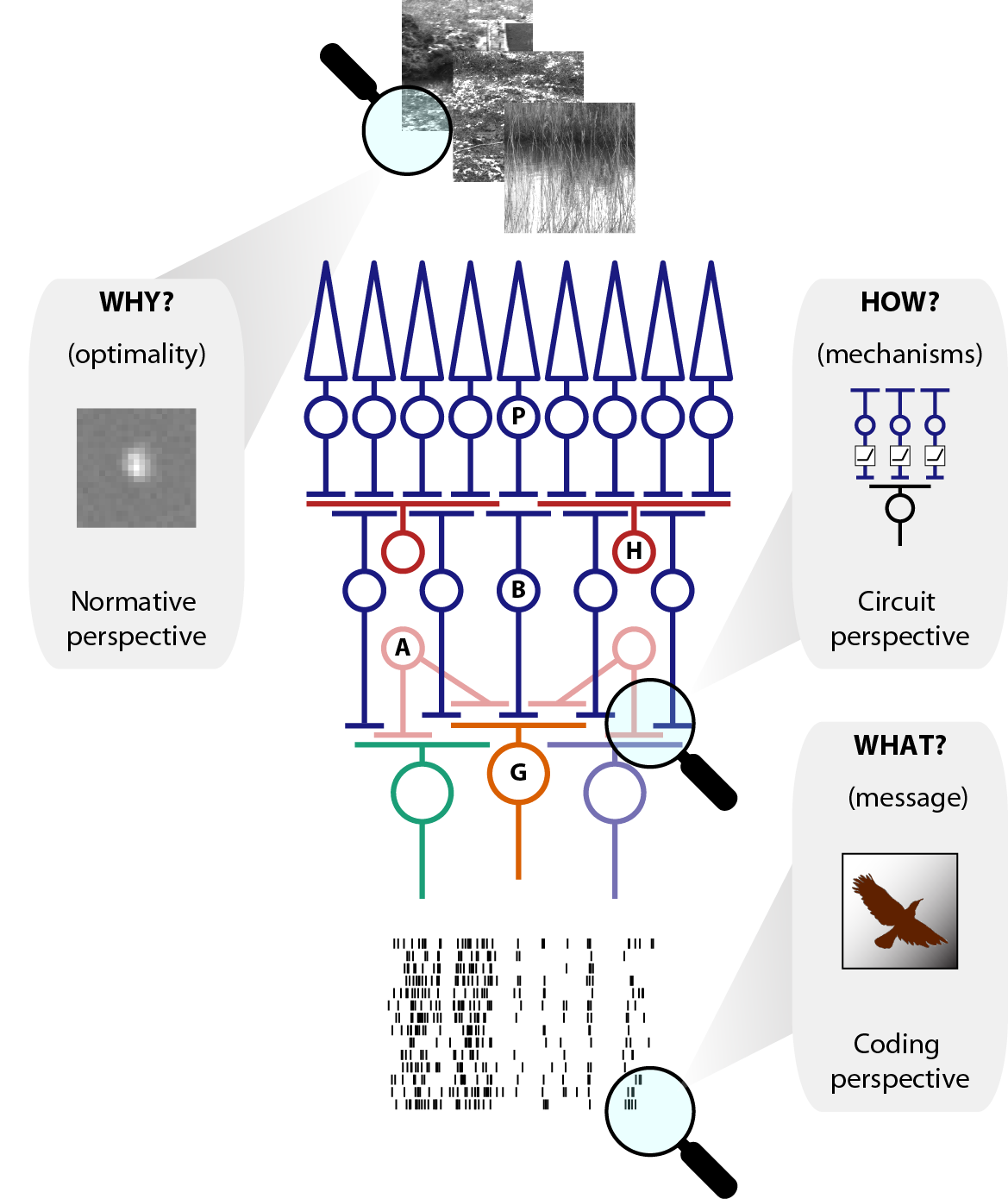

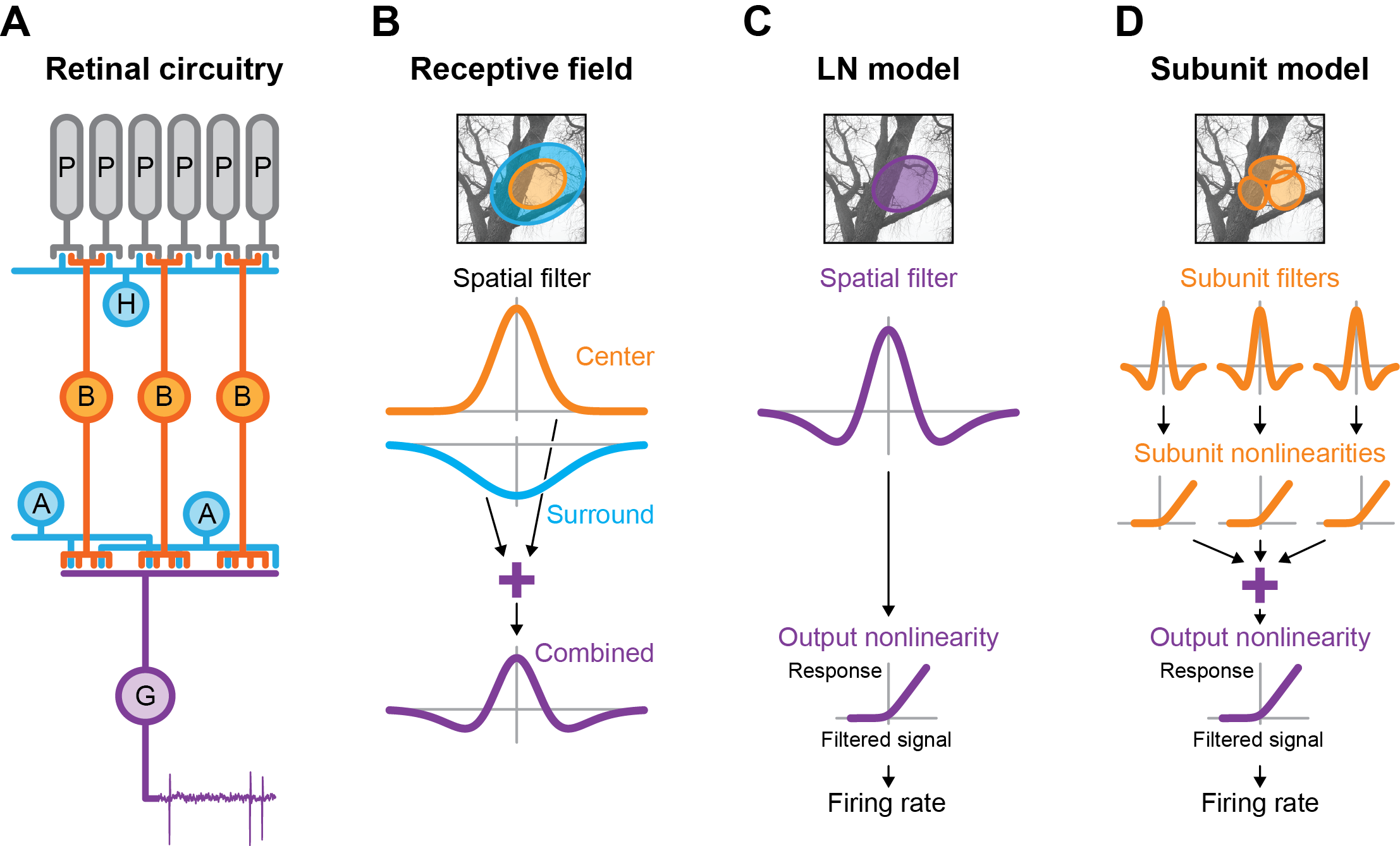

When light enters the eye, it falls onto a thin layer of neuronal tissue at the back of the eyeball – the retina. Here, light is taken up by photoreceptors and converted into electrical signals. These are then processed by an interconnected network of neurons and finally transformed into sequences of electrical pulses (so-called “action potentials” or “spikes”) in the output neurons of the retina, the retinal ganglion cells. The ganglion cells send this electrical activity to various downstream areas in the brain. Thus, all visual information of the outside world received by the brain must be encoded in the patterns of spikes that occur in the ganglion cells. How this activity represents visual information and how the neural network of the retina achieves this encoding of visual signals are among the fundamental questions about our sense of vision.

Moreover, understanding the representation of visual information in the spike patterns of retinal ganglion cells is also of concrete importance for the development of vision restoration therapies for patients suffering from loss of photoreceptors. About a million people worldwide are affected by inherited degeneration of photoreceptors, a condition known as retinitis pigmentosa, which results in a progressive loss of vision, ultimately leading to blindness. No cure is currently available to prevent or halt the photoreceptor degeneration. Thus, much effort is devoted to developing artificial ways of restoring vision. This may happen through retinal prostheses that electrically activate surviving nerve cells in the retina via an inserted electronic chip or through restoring light sensitivity via inserted light-sensitive proteins. In both cases, the best functional restoration of vision is to be expected when the artificial stimulation can recreate the natural activity patterns as closely as possible. In order to find appropriate stimulation strategies, mathematical models that describe how the retina translates natural as well as artificial stimulation into activity patterns will be a valuable tool.

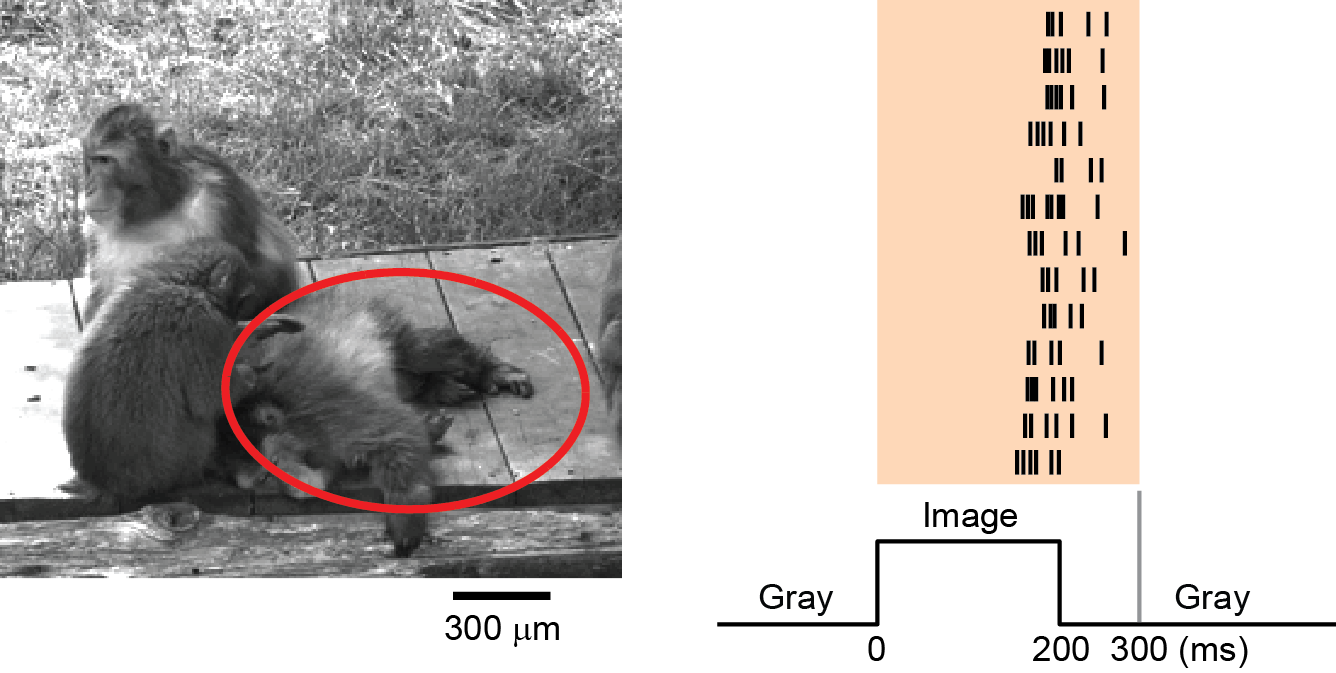

In the CODE4Vision project, we therefore aim at understanding visual signal processing in the retinal network under natural visual stimulation as well as under artificial stimulation through inserted light-sensitive proteins. To do so, we record the spikes of retinal ganglion cells in isolated retinal tissue with miniature electrodes while stimulating the retina with various light patterns. A key step for analyzing how the retina processes these light patterns is to identify the collection of inputs that a given ganglion cell receives from other neurons in the retinal network. The individual inputs are not readily observable, and we therefore develop mathematical techniques, based on methods from computational statistics and machine learning, in order to infer, for example, how many excitatory neurons send their signals to a given ganglion cell and where these input neurons are located. Based on the identified input layout, we can then investigate the signal transmission between the input neurons and the ganglion cells and build mathematical models that capture how the retina responds to natural as well as artificial stimulation.

Major Outcomes

In order to record spikes from large populations of retinal ganglion cells, we have established a new recording method in the lab where we use semiconductor chips with more than 4,000 recording sites. We have applied this to simultaneously obtain data from several hundred ganglion cells in retinal tissue obtained from mice or salamanders. We have also developed a novel method to identify the layout of excitatory inputs that feed into the recorded ganglion cells. This method is based on recording spikes under stimulation with finely structured spatial light patterns. We then analyze which fundamental components in these spatial patterns contribute to activating a ganglion cell (using a mathematical technique known as “non-negative matrix factorization”) and find that these fundamental components correspond to individual excitatory input neurons.
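To give a feel for what non-negative matrix factorization does, here is a minimal toy sketch in plain Python. It is not the analysis pipeline used in the project: the six-pixel "stimulus strip", the two subunit profiles, and the activation values are all made-up assumptions, and the solver is the generic multiplicative-update scheme rather than any specific variant from the publications. The idea is only that a set of non-negative patterns (rows of `v`) can be decomposed into a few non-negative building blocks (rows of `h`), which in this toy setting play the role of localized inputs.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def nmf(v, rank, n_iter=500, seed=0, eps=1e-9):
    """Approximate non-negative v (n x m) as w (n x rank) times h (rank x m).

    Uses generic multiplicative updates, which keep all entries non-negative.
    """
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(n_iter):
        # Update the building blocks h while holding the weights w fixed.
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[k][j] * num[k][j] / (den[k][j] + eps) for j in range(m)]
             for k in range(rank)]
        # Update the weights w while holding the building blocks h fixed.
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][k] * num[i][k] / (den[i][k] + eps) for k in range(rank)]
             for i in range(n)]
    return w, h

def relative_error(v, w, h):
    """Squared reconstruction error divided by the squared norm of v."""
    approx = matmul(w, h)
    err = sum((a - b) ** 2 for ra, rb in zip(v, approx) for a, b in zip(ra, rb))
    return err / sum(a ** 2 for ra in v for a in ra)

# Hypothetical toy data: two localized "subunits" on a strip of six pixels.
subunit_a = [1.0, 2.0, 1.0, 0.0, 0.0, 0.0]
subunit_b = [0.0, 0.0, 0.0, 1.0, 2.0, 1.0]
# Each observed pattern is a non-negative mixture of the two subunits.
activations = [(1, 0), (0, 1), (1, 1), (2, 0.5), (0.5, 2), (1.5, 0), (0, 1.5), (1, 2)]
v = [[a * pa + b * pb for pa, pb in zip(subunit_a, subunit_b)] for a, b in activations]

w, h = nmf(v, rank=2)
for component in h:
    print([round(x, 2) for x in component])
print("relative error:", relative_error(v, w, h))
```

Each printed row of `h` is one recovered component. In this toy example, the components should be concentrated on one half of the strip each, up to scaling and ordering, which the factorization leaves undetermined.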

Based on this information about input signals into ganglion cells, we have furthermore studied how signals are transformed when a ganglion cell pools its inputs and how this knowledge about the layout of inputs and their transformations can help us understand how ganglion cells respond to natural stimuli. Particular questions here have been how ganglion cells combine signals over space or over different color channels and how they adapt when visual contrast changes in part of a scene, such as when objects shift around or when the illumination conditions change. Studying these questions, we have found that different types of ganglion cells in the retina have different stimulus integration and different adaptation properties. Some cells combine signals over space or colors linearly, whereas others perform complex computational operations when combining signals. With respect to adaptation, some specific cells tend to represent primarily the object with the strongest contrast in their field of view, whereas others remain sensitive to other locations in their field of view and can thereby still respond to changes that happen at positions other than where the strongest contrast occurs.
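The difference between linear and nonlinear combination of signals over space can be illustrated with a deliberately simplified sketch. The two model cells below, the four stimulus locations, the equal weights, and the choice of half-wave rectification as the nonlinearity are all illustrative assumptions, not the models fitted in the project. The linear cell sums contrast over space before applying a threshold, whereas the nonlinear cell thresholds each local input before summing.

```python
def rectify(x):
    """Half-wave rectification: negative signals are cut off at zero."""
    return max(0.0, x)

def linear_cell(contrasts, weights):
    """Sum weighted contrasts over space first, then rectify the total."""
    return rectify(sum(w * c for w, c in zip(weights, contrasts)))

def nonlinear_cell(contrasts, weights):
    """Rectify each local input first, then sum the weighted results."""
    return sum(w * rectify(c) for w, c in zip(weights, contrasts))

# Hypothetical receptive field with four equally weighted input locations.
weights = [0.25, 0.25, 0.25, 0.25]

uniform_bright = [1.0, 1.0, 1.0, 1.0]    # same contrast everywhere
half_and_half = [1.0, 1.0, -1.0, -1.0]   # bright and dark halves cancel on average

print(linear_cell(uniform_bright, weights))     # 1.0
print(nonlinear_cell(uniform_bright, weights))  # 1.0
print(linear_cell(half_and_half, weights))      # 0.0
print(nonlinear_cell(half_and_half, weights))   # 0.5
```

Both model cells respond identically to the uniform stimulus, but only the nonlinear cell responds to the pattern whose bright and dark regions average out to zero. This is one simple way in which the order of summation and nonlinearity changes what a cell is sensitive to.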

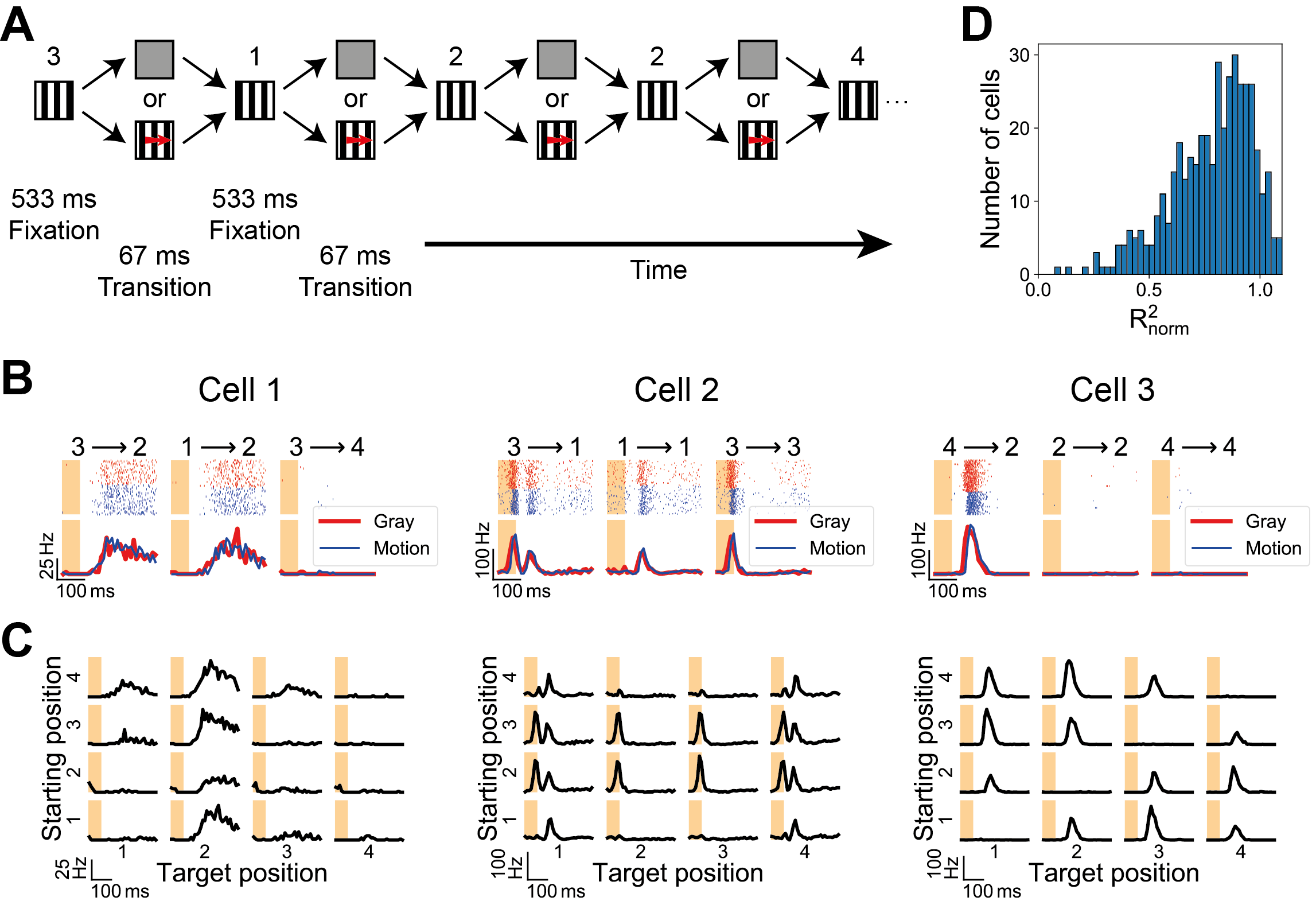

In parallel, we have studied how different aspects of natural vision affect the activity of ganglion cells. In particular, we have investigated how ganglion cells that are sensitive to particular motion directions of visual patterns respond when images move as they do under eye movements. Here, we have observed that it is not the responses of individual ganglion cells that are informative about the motion direction, but the information rather lies in the relative activity among multiple ganglion cells. This suggests that artificial stimulation should aim at preserving the relative levels of activation between different ganglion cells.
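Why relative activity can be more informative than the response of a single cell can be sketched with a toy population of direction-selective cells. Everything here is an assumption made for illustration: the four preferred directions, the rectified cosine tuning, the multiplicative `gain` factor (standing in for anything that scales all responses, such as contrast), and the population-vector readout. It is not the analysis applied to the recorded data.

```python
import math

# Hypothetical preferred directions of four direction-selective cells (degrees).
PREFERRED_DEG = [0.0, 90.0, 180.0, 270.0]

def responses(direction_deg, gain):
    """Rectified cosine tuning, scaled by a common gain factor."""
    return [gain * max(0.0, math.cos(math.radians(direction_deg - p)))
            for p in PREFERRED_DEG]

def decode_direction(rates):
    """Population-vector readout: depends only on the relative activity."""
    x = sum(r * math.cos(math.radians(p)) for r, p in zip(rates, PREFERRED_DEG))
    y = sum(r * math.sin(math.radians(p)) for r, p in zip(rates, PREFERRED_DEG))
    return math.degrees(math.atan2(y, x)) % 360.0

# A single cell is ambiguous: a different direction with a different gain
# can produce the same response in the cell that prefers 0 degrees.
gain_b = math.cos(math.radians(30.0)) / math.cos(math.radians(60.0))
print(responses(30.0, 1.0)[0], responses(60.0, gain_b)[0])

# The relative activity across cells still separates the two directions,
# and the decoded direction does not change when all responses are scaled.
print(decode_direction(responses(30.0, 1.0)))
print(decode_direction(responses(30.0, 3.0)))
print(decode_direction(responses(60.0, gain_b)))
```

In this sketch, scaling all responses by a common factor leaves the decoded direction unchanged, because the readout depends only on the ratios between the cells' activities.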

Finally, we have investigated retinas with artificial light sensitivity in neurons that are not photoreceptors. We have adjusted our visual stimulation equipment to obtain the required high light intensities that activate the artificial light sensors that have been inserted into the retina. This has allowed us to compare how individual ganglion cells respond to the natural stimulation of photoreceptors and to the artificial stimulation of the inserted light-sensitive proteins. We have found that ganglion-cell-specific response properties, like the temporal extent of evoked activity, are retained under artificial stimulation, whereas other aspects, like the timing of response onsets and the sensitivity to complex stimuli, are altered, but can be re-adjusted by appropriately transforming visual stimuli prior to activating the optogenetic constructs.

Publications from the project

Krüppel et al., Journal of Neuroscience 2023

Diversity of ganglion cell responses to saccade-like image shifts in the primate retina. Saccades are a fundamental part of natural vision. They interrupt fixations of the [...]

Karamanlis, Schreyer, and Gollisch, Annual Review of Vision Science 2022

Retinal encoding of natural scenes. An ultimate goal in retina science is to understand how the neural circuit of the retina processes natural visual scenes. Yet [...]

Zapp, Nitsche, and Gollisch, Trends in Neurosciences 2022

Retinal receptive-field substructure: scaffolding for coding and computation. The center-surround receptive field of retinal ganglion cells represents a fundamental concept for how the retina processes and [...]

Liu et al., PLoS Computational Biology 2022

Simple model for encoding natural images by retinal ganglion cells with nonlinear spatial integration. A central goal in sensory neuroscience is to understand the neuronal signal [...]